GDC 2009: The Year We Went Hungry

I’ve written before that at SIGGRAPH you starve while at GDC they stuff you like a foie gras goose. No more. This year there were no breakfast pastries before the lectures started. There were no cookies at the coffee breaks. Some lines ran out of lunches and sodas. Perhaps CMP took a look at the girth of the attendees and decided that the average game developer would benefit from a diet.

The lean times extended to the recruiting floor. It was obvious looking at the career expo that, despite rumors to the contrary, our industry is far from recession-proof. There looked to be about half as many booths as there were last year.

Recruiting aside, GDC looked as crowded as ever–which is pretty impressive considering that attendance last year was 18,000 developers. Unfortunately, this whole paragraph from my write-up last year is still accurate:

GDC hasn’t managed to scale to handle its growth. Internet connectivity was poor throughout the convention center…. proceedings didn’t become available at all until after the conference, and those in the form of a slim handful of PowerPoint decks. The clever people have taken to bringing cameras to the talks and snapping pictures of each slide that goes up, which is distracting but understandable. The state of the GDC proceedings is an embarrassment to the industry, but it’s one that I don’t expect to change unless CMP stops handing out Gigapasses to speakers who show up without their slides.

This year, even AT&T’s 3G network was overwhelmed. I had to switch my phone back to the EDGE network to get any bandwidth. 18,000 geeks use a lot of bandwidth.

What’s hot: SPU task profiles. Screen-space postprocessing. iPhones. AI. Larrabee. Producers explicitly defending crunch. Margaret Robertson.

What’s not: Spherical harmonics (still). Every mobile phone except the iPhone. Scrum (it’s lost its New Process smell… crunch is the new scrum). The 7 to 9 scale. OnLive.

The Race for a New Game Machine

“I always envied the guys who wrote the software that would power the PlayStation 3.” (p. 58)

The Race for a New Game Machine, by David Shippy and Mickie Phipps, is a clumsily-written and frustratingly vague book, but game developers should read it anyway.

Shippy led the team that developed the PowerPC core common to the PS3’s Cell processor and the Xbox 360 CPU. Although his subordinate Mickie Phipps is given equal authorial credit, Race is written entirely in Shippy’s voice. Shippy was middle management, two levels up from the engineers designing the logic and circuitry of the processor and two levels down from the corporate VPs negotiating with Microsoft and Sony. As a result the book mixes corporate politics and technical detail. Unfortunately it rarely gives a satisfying dose of either.

“When the [Halo 3] player swings the joystick and levels a weapon at a charging alien beast,” Shippy writes in his introduction, “then presses the button and showers it with lead, splattering it straight back to hell, the quality of the experience depends less on the fancy code written by the people at Microsoft than on the processor brains in the chip inside the box.” I’m a software developer, so my biases are obvious, but the primacy of hardware strikes me as a difficult argument to make. There are plenty of games available for the Xbox 360 that most players would agree don’t come close to the “quality of the experience” delivered by Halo, though they run on the same platform. And consumers voting with their money seem to think that Wii Fit delivers a higher-quality experience than Halo does, on hardware with a fraction of the processing power. I’d argue that hardware has been a commodity for some time, that game code is in the process of becoming a commodity, and that for a game to differentiate itself in the market today, it has to do so in the quality of its creative attributes: art direction, story and game design.

Unfortunately Shippy has a habit of resorting to purple prose at the cost of clarity and accuracy. Consider his description of the history of electronic gaming:

Throughout the 1970s and 1980s the PC industry skyrocketed, too, providing the perfect low-cost platform for game developers. They capitalized on each step up in the PC’s speed or bandwidth, each increase in the size of its memory or access time, and every decrease in power consumption. Graphics engines (separate chips that could handle the graphics creation for the games) allowed the PC’s microprocessor to focus on the calculations to create characters that move and behave more like real people and environments that are more intense…. The rise in the PC games market dealt a near death blow to the game console industry. By the early 1980s, even with new models still on the shelf, consumers were rapidly losing interest in the aging Atari game machine, and no heir-apparent was in sight…. It took nearly a decade, but the game console market suddenly bounced back from the dead with the launch of Sony’s Playstation in 1994…

In the space of a page, Shippy gives the impression that

- Consumer PC 3D graphics cards existed in the eighties

- PC game developers care about power consumption

- Gaming PCs with graphics and sound acceleration killed the Atari 2600

- The Nintendo NES never existed

Coming from a pair of engineers, such technical imprecision is astonishing. The clumsiness extends to the authors’ use of language as well. They “hone in on” solutions and “flush out ideas.” Shippy interrupts a discussion of logic changes with the tortuously mixed metaphor, “In other words, there would be road construction to add new highways, and the traffic cop would have a lot more balls up in the air to juggle.”

But if a good copy editor could have helped the writers with their wording, he would have been powerless to correct their technical sloppiness. For example, Shippy provides the oddest description of a game’s update-render loop that I’ve ever heard:

Although it may seem like magic to most people, game code is really quite simple. It can fundamentally be broken down into three major options. First it requires incoming streams of large amounts of data generated from a peripheral such as a joystick, controller, or keyboard. This is called “read streaming.” Second, that incoming data stream has to be processed by one or more numeric engines. Finally, the data processed in the numeric engines has to be streamed back out to the video display to create the colorful graphics. This is called “write streaming.”

I’ve worked as a software engineer on games for more than a decade, most of that in core technology, and I’ve never heard of “read streaming” or “write streaming” before. Controller inputs provide a trivially small amount of data. (That’s why networked real-time strategy games tend to sync inputs and run deterministic simulations instead of syncing all game state.) Write streaming, or “rendering” as we call it in the biz, is more memory-bandwidth intensive, but Shippy confuses matters by blurring the line between operations performed on the CPU and operations performed on the graphics processor.

Whether Shippy intends “write streaming” to refer to the transfer of data from CPU to GPU or from the GPU to the framebuffer, it accounts for a small fraction of a frame. Most of the CPU processing time for any game is spent marshaling data and performing general-purpose logic, with smaller portions of the frame devoted to number-crunching for physics, animation or graphics. Or as Chris Hecker puts it, “graphics and physics grind on large homogenous floating point data structures in a very straight-line structured way. Then we have AI and gameplay code. Lots of exceptions, tunable parameters, indirections and often messy. We hate this code, it’s a mess, but this is the code that makes the game DIFFERENT.”

You have to wonder if the difference between Hecker’s understanding of how a frame loop operates and Shippy’s is part of the reason why the PS3 contains a CPU optimized for stream processing rather than one optimized for messy game logic. And puzzles like this are the reason why The Race for a New Game Machine is worth reading despite its flaws. The book tells a story, and if it’s awkwardly written and frustratingly vague, it also offers occasional fascinating insights into how the consoles on which we develop were created.

As the book relates, the Power core used in the Xbox 360 and the PS3 was originally developed in a joint venture between Sony, Toshiba and IBM. While development was still ongoing, IBM–which retained the rights to use the chip in products for other clients–contracted with Microsoft to use the new Power core in their console. This arrangement left Sony engineers in an IBM facility unknowingly working on features to support Sony’s biggest competitor, and left Shippy and other IBM engineers feeling conflicted in their loyalties.

Race paints a striking picture of the different corporate cultures at Sony and Microsoft. Sony comes across as a vast impersonal hierarchy ruled by the impulses of Ken Kutaragi, then head of Sony Computer Entertainment. Most of the high-level innovations of the Cell processor are attributed to Kutaragi. So are other strange and whimsical mandates. At one point Kutaragi demands that the Cell must have eight Synergistic Processing Units, rather than the originally agreed-upon six, because “eight is beautiful.” IBM complies and produces a chip with eight SPUs. (The book doesn’t mention that, as Wikipedia points out, the PS3 only has “six accessible [SPUs] … to improve production yields.” It also never mentions that the 4 GHz core Shippy set out to design became a 3.2 GHz core in the shipping consoles, presumably also to improve yields.)

Microsoft is the agile hare to Sony’s tortoise. Individual Microsoft engineers come across as having more initiative–and a certain cockiness to go with it–but their desires are less grandiose than Kutaragi’s and they seem to have a better idea what they want. Microsoft is focused on software and services. They want simpler hardware than Sony’s Cell processor and they’re flexible in cutting features as long as they make their intended ship date. Because they’re developing a processor more similar to an off-the-shelf PowerPC chip, Microsoft is able to put development kits in the hands of game developers a year and a half before Sony. Because they control their manufacturing risks, they’re able to put final hardware on shelves a year before Sony.

For readers who are more familiar with the design of software than hardware, The Race for a New Game Machine offers a window into a strange parallel world where much is intriguingly similar and some things are very different. In the early days of development on the new Power core, Shippy describes junior engineers “chomping at the bit to dive into the detailed implementation” before the high-level design is done, an experience familiar to most experienced software developers. But a few pages later, Shippy tells how a “junior engineer’s face lit up with enthusiasm” at the idea of getting a new idea patented. Software patents are anathema to most game developers! The technical work the book describes is a mix of logic design, which sounds familiar to anyone used to writing code, and physical layout, which sounds more like playing SimCity as the chip designers map that logic onto actual circuits on the smallest chip possible. Finally, Shippy’s description of the brutal crunch engineers experienced in finishing the chip’s design will be all too familiar to anyone who’s shipped a game.

One of the unnecessary risks Sony took on in developing the PS3 was an attempt to build their own graphics processor. Toward the end of the Cell’s development, Shippy writes, “the graphics chip designed by the Sony engineers was slipping schedule. Sony had designed simple graphics chips in the past, but it was clearly not their area of expertise. After many months of schedule slips, it became obvious that Sony could not deliver the promised graphics chip.” This story raises as many questions as it answers. Was Sony trying to develop a full GPU, or merely developing a rasterizer in the assumption that the Cell’s SPUs could handle vertex transformation? If the latter, did the graphics processor fail to perform, did the SPUs, or could the platform not transfer data from SPUs to processor fast enough?

The story raises as many questions as it answers. But if The Race for a New Game Machine hadn’t been written, we wouldn’t know to ask those questions at all. We’re better off for having read the book. It’s just a shame the book’s not better.

Good Middleware

Love is patient, love is kind. It does not envy, it does not boast, it is not proud. It is not rude, it is not self-seeking, it is not easily angered, it keeps no record of wrongs. Love does not delight in evil but rejoices with the truth. It always protects, always trusts, always hopes, always perseveres.

1 Corinthians 13

Love is easy. Middleware is hard.

We used middleware on Fracture for physics, tree rendering, audio, animation, facial animation, network transport, and various other systems. Now that the game is finished, we’re taking stock of the lessons that we learned and deciding what packages we want to keep and what we want to replace as we move forward.

Middleware offers two main benefits, each of which is balanced by an associated cost:

1. Middleware provides you with more code than you could write yourself for a fraction of what it would cost you to try. No matter how clever you are, that’s how the economics of the situation work. The costs of developing a piece of software are largely fixed. But once written, any piece of software can be sold over and over again. Because they can spread their costs over a large number of customers, middleware vendors can afford to keep larger, more experienced teams working on a given piece of functionality than independent game developers can. During Fracture, we watched a couple of pieces of immature middleware spring up after we’d written similar tools ourselves. We watched those pieces of middleware grow and surpass our tools, because we couldn’t afford to devote the resources to our tools that the middleware vendors devoted to theirs.

The corresponding cost is that none of the functionality you get is exactly what you would have written yourself, and much of it will be entirely useless to you. Middleware is written to support the most common cases. If you have specific needs that differ from the norm, you may be better off implementing a solution yourself.

2. Middleware offers structure. Middleware draws a line between the things that you have to worry about and the things you don’t. As long as it’s reasonably well documented and stable, you don’t need to waste mental bandwidth worrying about the things that go on underneath your middleware’s public API. As games grow ever-larger and more complex it’s become incredibly valuable to be able to draw a line and say, The stuff on the other side of that line isn’t my responsibility, and I don’t have to worry about it.

The associated cost is that you can’t change what’s on the other side of that line. If you’re going to use middleware, you have to be willing to accept a certain amount of inflexibility in dealing with the problems that the middleware solves. You have to be willing to shape your own technology to suit the third-party libraries that you’re buying. Trying to do otherwise is a recipe for misery.

Given those benefits and costs, it makes sense to use middleware wherever you can–as long as you don’t try to license technology in the areas where you want your game to be unique. Every game has certain unique selling propositions: things that make it distinct from every other game on the market. Likewise, every game has certain characteristics that it shares with many others. When it comes to the latter, you should buy off-the-shelf technology, accept that technology’s inflexibility, and modify your game design to suit it. When it comes to the former, you should write the code in-house. As Joel Spolsky says, don’t outsource your core competency.

So now that you’ve figured out which game functions you should be implementing through middleware, how do you decide which of the scads of available middleware packages is best for you? There are a number of issues to keep in mind.

Good middleware lets you hook your own memory allocator. If your approach to memory is to partition the entire free space up front and minimize runtime allocations, then you’ll want the ability to reserve a block for your middleware and allocate out of that. Even if you allow dynamic allocations at any time, you’ll want to track how much memory is used by each system and instrument allocations to detect memory leaks. Any piece of middleware that goes behind your back and allocates memory directly just isn’t worth buying.
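As a rough illustration of what hooking an allocator can look like, here is a minimal sketch. The `mw` namespace, `AllocatorHooks`, and `Buffer` are invented for this example and don't correspond to any real middleware package; the game-side callbacks simply wrap `malloc` and `free` and keep a running tally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical middleware API: every name in namespace mw is illustrative.
namespace mw {

struct AllocatorHooks {
    void* (*alloc)(std::size_t bytes, void* user);
    void  (*release)(void* ptr, std::size_t bytes, void* user);
    void* user;  // opaque pointer handed back to the game's callbacks
};

// A library object that only ever allocates through the hooks it was given.
class Buffer {
public:
    Buffer(const AllocatorHooks& hooks, std::size_t bytes)
        : m_hooks(hooks), m_bytes(bytes), m_data(nullptr) {
        assert(hooks.alloc != nullptr && hooks.release != nullptr);
        m_data = m_hooks.alloc(m_bytes, m_hooks.user);
    }
    ~Buffer() { m_hooks.release(m_data, m_bytes, m_hooks.user); }

    Buffer(const Buffer&) = delete;
    Buffer& operator=(const Buffer&) = delete;

    void* Data() const { return m_data; }

private:
    AllocatorHooks m_hooks;
    std::size_t m_bytes;
    void* m_data;
};

}  // namespace mw

// Game side: a tracking allocator that records what the middleware is using.
struct MemoryStats {
    std::size_t bytesInUse;
    std::size_t liveAllocations;
};

void* TrackedAlloc(std::size_t bytes, void* user) {
    MemoryStats* stats = static_cast<MemoryStats*>(user);
    stats->bytesInUse += bytes;
    stats->liveAllocations += 1;
    return std::malloc(bytes);
}

void TrackedFree(void* ptr, std::size_t bytes, void* user) {
    MemoryStats* stats = static_cast<MemoryStats*>(user);
    stats->bytesInUse -= bytes;
    stats->liveAllocations -= 1;
    std::free(ptr);
}
```

With hooks like these, a nonzero `liveAllocations` after the middleware shuts down points at a leak, and `TrackedAlloc` could just as easily carve memory out of a block reserved up front.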

Good middleware lets you hook your own I/O functions. Most games store resources in package files like the old Doom WAD files. Middleware that doesn’t let you hook file I/O doesn’t let you put its resources in packfiles. Many modern games stream resources off DVD as the player progresses through a level. If you can’t control middleware I/O operations, then you can’t sort file accesses to minimize DVD seeks. You’ll waste read bandwidth and your game will be subject to unpredictable hitches. Again, any piece of middleware that does file I/O directly just isn’t worth buying.
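The same idea applies to file access. In the sketch below, `mw::IFileSystem` and `mw::LoadAssetSize` are hypothetical stand-ins for a middleware I/O hook and a loader that uses it, and `PackFileSystem` is an assumed game-side implementation that serves files from an in-memory table instead of a real packfile.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

namespace mw {

// Hypothetical hook: the middleware calls this instead of opening files itself.
class IFileSystem {
public:
    virtual ~IFileSystem() {}
    // Fills 'out' with the file's bytes; returns false if the file is missing.
    virtual bool ReadFile(const std::string& path, std::vector<char>& out) = 0;
};

// Stand-in for a middleware loader: it never touches the disk directly.
inline std::size_t LoadAssetSize(IFileSystem& fs, const std::string& path) {
    assert(!path.empty());
    std::vector<char> bytes;
    return fs.ReadFile(path, bytes) ? bytes.size() : 0;
}

}  // namespace mw

// Game side: serves files out of an in-memory table standing in for a packfile.
class PackFileSystem : public mw::IFileSystem {
public:
    void Add(const std::string& path, const std::string& contents) {
        m_entries[path].assign(contents.begin(), contents.end());
    }

    bool ReadFile(const std::string& path, std::vector<char>& out) override {
        auto it = m_entries.find(path);
        if (it == m_entries.end()) {
            return false;
        }
        out = it->second;
        ++m_reads;  // the game sees every successful read the middleware makes
        return true;
    }

    int Reads() const { return m_reads; }

private:
    std::map<std::string, std::vector<char>> m_entries;
    int m_reads = 0;
};
```

Because every read funnels through `ReadFile`, the game is free to queue, sort, or batch requests before they ever reach the drive.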

Good middleware has extensible functionality. No middleware package will do everything you want out of the box. But you shouldn’t have to modify any piece of middleware to make it do what you need. Good middleware offers abstract interfaces that you can implement and callbacks that you can hook where you need to do something unique to your game. An animation package may let you implement custom animation controllers. A physics package may let you write your own collision primitives. Your objects should be first-class citizens of your middleware’s world.
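To make "first-class citizens" concrete, here is a small sketch of a physics-style library that exposes an abstract shape interface. The `mw` names are hypothetical, and `FloorHalfSpace` is an assumed game-specific primitive; the point is only that the library's `World` treats the game's shape exactly like its own built-in `Sphere`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

namespace mw {

struct Vec3 { float x, y, z; };

// Hypothetical extension point: any shape implementing this can join the world.
class ICollisionShape {
public:
    virtual ~ICollisionShape() {}
    virtual bool Contains(const Vec3& p) const = 0;
};

// A primitive that ships with the library.
class Sphere : public ICollisionShape {
public:
    Sphere(const Vec3& center, float radius) : m_center(center), m_radius(radius) {}
    bool Contains(const Vec3& p) const override {
        const float dx = p.x - m_center.x;
        const float dy = p.y - m_center.y;
        const float dz = p.z - m_center.z;
        return dx * dx + dy * dy + dz * dz <= m_radius * m_radius;
    }
private:
    Vec3 m_center;
    float m_radius;
};

// The world stores non-owning pointers and never asks where a shape came from.
class World {
public:
    void AddShape(const ICollisionShape* shape) {
        assert(shape != nullptr);
        m_shapes.push_back(shape);
    }
    std::size_t CountHits(const Vec3& p) const {
        std::size_t hits = 0;
        for (std::size_t i = 0; i < m_shapes.size(); ++i) {
            if (m_shapes[i]->Contains(p)) {
                ++hits;
            }
        }
        return hits;
    }
private:
    std::vector<const ICollisionShape*> m_shapes;
};

}  // namespace mw

// Game side: a custom primitive the library knows nothing about.
class FloorHalfSpace : public mw::ICollisionShape {
public:
    explicit FloorHalfSpace(float height) : m_height(height) {}
    bool Contains(const mw::Vec3& p) const override { return p.y <= m_height; }
private:
    float m_height;
};
```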

Good middleware avoids symbol conflicts. Beware of middleware that uses the Standard Template Library carelessly. It’ll work fine until you try to switch to STLPort or upgrade to a new development environment, and then you’ll suddenly find that your engine has multiple conflicting definitions of std::string and other common classes. To avoid symbol clashes, every class in a middleware library should start with a custom prefix or be scoped inside a library namespace. And if a piece of middleware is going to use the STL, it should do so carefully, making sure that every STL class instantiated uses a custom allocator. That allows you to hook your own memory allocator and avoids symbol conflicts.
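One way this might look in practice is sketched below, assuming a C++11 compiler. Everything lives in a hypothetical `mw` namespace, and the library's string and vector types are instantiated with its own allocator, so they are distinct types from `std::string` and `std::vector<T>`. The byte counter is only there to show that allocations really do go through the hook.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <new>
#include <string>
#include <vector>

namespace mw {

// Illustrative counter; a real library would forward to the game's allocator hooks.
static std::size_t g_bytesRequested = 0;

// Minimal C++11 allocator that routes every container allocation through one place.
template <typename T>
struct HookedAllocator {
    typedef T value_type;

    HookedAllocator() {}
    template <typename U>
    HookedAllocator(const HookedAllocator<U>&) {}

    T* allocate(std::size_t n) {
        const std::size_t bytes = n * sizeof(T);
        g_bytesRequested += bytes;
        void* p = std::malloc(bytes ? bytes : 1);
        if (p == nullptr) {
            throw std::bad_alloc();
        }
        return static_cast<T*>(p);
    }

    void deallocate(T* p, std::size_t) {
        assert(p != nullptr);
        std::free(p);
    }
};

// The allocator is stateless, so all instances compare equal.
template <typename T, typename U>
bool operator==(const HookedAllocator<T>&, const HookedAllocator<U>&) { return true; }
template <typename T, typename U>
bool operator!=(const HookedAllocator<T>&, const HookedAllocator<U>&) { return false; }

// Library-scoped container types: different instantiations from std::string
// and std::vector<T>, and scoped inside the library's namespace.
typedef std::basic_string<char, std::char_traits<char>, HookedAllocator<char>> String;

template <typename T>
using Vector = std::vector<T, HookedAllocator<T>>;

}  // namespace mw
```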

Good middleware is explicit about its thread safety. We live in an ever-more-multithreaded world, but one where most game engines are still bound by main thread operations most of the time. For best performance, you want to offload any operations you can onto other threads. To do that with a piece of middleware, you need to know which operations can be performed concurrently and which have to happen sequentially. Ideally a piece of middleware will let you create resources asynchronously so you can construct objects in a loader thread before handing them off to the game.

Good middleware fits into your data pipeline. Most companies export data in an inefficient platform-independent format, then cook it into optimized platform-specific formats and build package files out of those as part of a resource build. Any piece of middleware should allow content creators to export their assets in a platform-independent format. Ideally, that format should still be directly loadable. The build process should be able to generate platform-specific versions of those assets using command-line tools.

Good middleware is stable. One of the main benefits of middleware is that it frees your mind to focus on more critical things. In this respect, middleware is like your compiler: It frees you from having to think about low-level implementation details–but only as long as you trust the compiler! A buggy piece of middleware is a double curse, because instead of freeing your attention from a piece of functionality, it forces you to focus your attention there, on code that was written by a stranger and that no one in your company understands. Worse still, if you’re forced to make bug fixes yourself, then you need to carry them forward with each new code drop you get of your middleware libraries. You shouldn’t need to concern yourself with the implementation details of your middleware. Middleware is only a benefit to the extent that its API remains inviolate.

Good middleware gives you source. Despite the previous point, having access to the source for any middleware package is a must. Sometimes you’ll suspect that there’s a bug in the middleware. Sometimes you need to see how a particular input led to a particular result before you can understand why the input was wrong. Sometimes you’ll have to fix a bug no matter how good the middleware is. And frequently you’ll need to recompile to handle a new platform SDK release or to link with some esoteric build configuration.

There are other questions to ask about any piece of middleware: How much memory does it use? How much CPU time does it require? What’s the upgrade path for your current code and data? How does it interact with your other middleware? How good is the vendor’s support? How much does it cost?

But those questions have vaguer answers. Acceptable performance or cost will vary depending on the nature of your product. A cell phone game probably can’t afford to license the Unreal Engine–and probably couldn’t fit it in available memory if it did! Data upgrade paths are less of a concern if you’re writing a new engine from scratch than if you’re making the umpteenth version of an annual football game.

The rules that don’t vary are: You should buy middleware wherever you can do so without outsourcing your core competency. And to be worth buying, any piece of middleware should behave itself with respect to resource management and concurrency.

An Anatomy of Despair: Managers and Contexts

Many of the design ideas that shaped the Despair Engine were reactions to problems that had arisen in earlier projects that we’d worked on. One of those problems was the question of how to handle subsystem managers.

Many systems naturally lend themselves to a design that gathers the high-level functionality of the system behind a single interface: A FileManager, for example, might expose functions for building a file database from packfiles and loose files. An AudioManager might expose functions for loading and playing sound cues. A SceneManager might expose functions for loading, moving and rendering models.

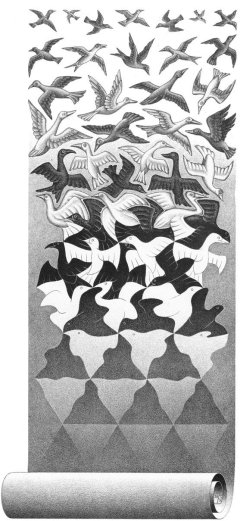

Once upon a time, these objects would all have been global variables. There are a number of problems with using global objects as managers, though, the most critical of which is the uncertainty of static initialization and destruction order. Your managers start out in a pristine garden of Eden, free of all knowledge of good, evil, and (most importantly) one another. But as your game gets more and more complicated, your managers are going to develop more dependencies on one another. To add streaming of sounds to AudioManager, for example, you may need to make it reference FileManager. To stream asynchronously, you may also need to reference AsyncJobManager. But at construction time, you can’t be sure that any of those managers exist yet.

This prompts clever people who’ve read Design Patterns to think, Aha! I’ll make all my managers Meyers singletons! And they go and write something like

    FileManager& GetFileManager()
    {
        static FileManager theFileManager;
        return theFileManager;
    }

This function will create a FileManager object the first time it’s called and register it for destruction at program exit. There are still a couple of problems with that, though:

- First, like all function-local static variables in C++98, theFileManager is inherently unthreadsafe. If two threads happen to call GetFileManager simultaneously, and theFileManager hasn’t been constructed yet, they’ll both try to construct it. Wackiness ensues. This quibble is due to be fixed in C++0x.

- Imagine what happens if the constructor for AudioManager calls GetFileManager and the constructor for FileManager calls GetAudioManager. To quote chapter and verse of the ISO C++ Standard, “If control re-enters the declaration (recursively) while the [static] object is being initialized, the behavior is undefined.” (ISO Standard 6.7.4) The compiler can do whatever it wants, and whatever it does is unlikely to be what you want.

- Although Meyers singletons give you a JIT safe order of construction, they make no promises about their order of destruction. If AudioManager is destroyed at program exit time, but FileManager wants to call one last function in AudioManager from its destructor, then again you have undefined behavior.

We dealt with those issues in MechAssault 2 by eschewing automatic singletons in favor of explicitly constructed and destroyed singletons. Every manager-type object had an interface that looked something like

    class FileManager
    {
    public:
        static bool CreateInstance();
        static void DestroyInstance();
        static FileManager* GetInstance();
    };

This works better than automatic singletons. It worked well enough that we were able to ship a couple of quite successful games with this approach. We wrote a high-level function in the game that constructed all the managers in some order and another high-level function that shut them all down again. This was thread-safe, explicitly ordered and deterministic in its order of destruction.

But as we added more and more library managers, cracks started to show. The main problem was that although we constructed managers in an explicit order, dependencies between managers were still implicit. Suppose, for example, that AudioManager is created before FileManager, but that you’re tasked with scanning the filesystem for audio files at start-up time. That makes AudioManager dependent on FileManager for the first time. Now AudioManager needs to be created after FileManager.

Changing the order in which managers were constructed was always fraught with peril. Finding a new working order was a time-consuming process because ordering failures weren’t apparent at compile time. To catch them, you needed to actually run the game and see what code would crash dereferencing null pointers. And once a new ordering was found, it needed to be propagated to every application that used the reordered managers. Day 1 has always placed a strong emphasis on its content creation tools, and most of those tools link with some subset of the game’s core libraries, so a change might need to be duplicated in six or eight tools, all of which would always compile–but possibly fail at runtime.
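The explicit create/destroy pattern, and the hidden ordering dependency that comes with it, can be sketched roughly as follows. This is a simplified illustration rather than the MechAssault 2 code: the bodies, `StartUp`, and `ShutDown` are assumptions, and here a misordered creation returns false instead of crashing on a null pointer.

```cpp
#include <cassert>

class FileManager {
public:
    static bool CreateInstance() {
        assert(s_instance == nullptr);
        s_instance = new FileManager;
        return true;
    }
    static void DestroyInstance() {
        delete s_instance;
        s_instance = nullptr;
    }
    static FileManager* GetInstance() { return s_instance; }

private:
    FileManager() {}
    ~FileManager() {}
    static FileManager* s_instance;
};
FileManager* FileManager::s_instance = nullptr;

class AudioManager {
public:
    static bool CreateInstance() {
        assert(s_instance == nullptr);
        // Implicit dependency: nothing in this signature says that
        // FileManager has to exist first. The compiler can't check it.
        if (FileManager::GetInstance() == nullptr) {
            return false;
        }
        s_instance = new AudioManager;
        return true;
    }
    static void DestroyInstance() {
        delete s_instance;
        s_instance = nullptr;
    }
    static AudioManager* GetInstance() { return s_instance; }

private:
    AudioManager() {}
    ~AudioManager() {}
    static AudioManager* s_instance;
};
AudioManager* AudioManager::s_instance = nullptr;

// Every application and tool needs its own copy of this ordering.
bool StartUp() {
    if (!FileManager::CreateInstance()) {
        return false;
    }
    if (!AudioManager::CreateInstance()) {
        FileManager::DestroyInstance();
        return false;
    }
    return true;
}

// Shutdown runs in the reverse order of creation.
void ShutDown() {
    AudioManager::DestroyInstance();
    FileManager::DestroyInstance();
}
```

Swapping the two calls in `StartUp` still compiles cleanly, which is exactly the problem: the mistake only shows up when the program runs.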

With Despair, one of our governing principles has been to make implicit relationships explicit. As applied to system manager construction, that meant that managers would no longer have static GetInstance functions that could be called whether the manager existed or not. Instead, each manager takes pointers to other managers as constructor parameters. As long as you don’t try to pass a manager’s constructor a null pointer (which will assert), any order-of-initialization errors will be compile-time failures. To make sure that managers are also destroyed in a valid order, we use ref-counted smart pointers to refer from one manager to another. As long as any manager exists, the managers that it requires will also exist.

Our current code looks something like

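    class IAudioManager
    {
    public:
        virtual ~IAudioManager() {}

        // Factory function: how code outside the audio library creates the manager.
        static IAudioManagerPtr Create(const IFileManagerPtr& fileMgr);
    };

    class AudioManager : public IAudioManager
    {
    public:
        // The required lower-level manager is an explicit constructor parameter.
        explicit AudioManager(const IFileManagerPtr& fileMgr);
    };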
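To show how the ref-counting enforces shutdown order, here is a fuller sketch that can actually be compiled. It uses `std::shared_ptr` as a stand-in for whatever ref-counted pointer type an engine provides, and the `Exists` and `CanPlay` functions and the `g_destroyed` log are invented purely so the behavior can be observed.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Records destruction order so the example can be checked.
static std::vector<std::string> g_destroyed;

class IFileManager {
public:
    virtual ~IFileManager() {}
    virtual bool Exists(const std::string& path) const = 0;
};
typedef std::shared_ptr<IFileManager> IFileManagerPtr;

class FileManager : public IFileManager {
public:
    ~FileManager() override { g_destroyed.push_back("FileManager"); }
    bool Exists(const std::string& path) const override { return !path.empty(); }
};

class IAudioManager;
typedef std::shared_ptr<IAudioManager> IAudioManagerPtr;

class IAudioManager {
public:
    virtual ~IAudioManager() {}
    virtual bool CanPlay(const std::string& cue) const = 0;
    static IAudioManagerPtr Create(const IFileManagerPtr& fileMgr);
};

class AudioManager : public IAudioManager {
public:
    explicit AudioManager(const IFileManagerPtr& fileMgr) : m_fileMgr(fileMgr) {
        assert(m_fileMgr);  // passing a null manager is a programming error
    }
    ~AudioManager() override { g_destroyed.push_back("AudioManager"); }
    bool CanPlay(const std::string& cue) const override {
        return m_fileMgr->Exists(cue);
    }

private:
    IFileManagerPtr m_fileMgr;  // strong reference keeps FileManager alive
};

IAudioManagerPtr IAudioManager::Create(const IFileManagerPtr& fileMgr) {
    return std::make_shared<AudioManager>(fileMgr);
}
```

Since `Create` can't be called without an `IFileManagerPtr` in hand, trying to build the audio manager first simply doesn't compile. And because `AudioManager` holds a strong reference, the file manager outlives it even if the application drops its own pointer early.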

One problem remains. Although AudioManager exposes the public interface of the audio library to the rest of the application, there are also private support and utility functions that the audio system should only expose to its own resources. Furthermore, many of these utility functions will probably need access to lower-level library managers.

This could be solved by having global utility functions that take manager references as parameters and by making every audio resource hold onto pointers to every lower-level manager that it might use. But that would bloat our objects and add reference-counting overhead. A more efficient and better-encapsulated solution was to give each library a context object. As Allan Kelly describes in his paper on the Encapsulated Context pattern:

A system contains data that must be generally available to divergent parts of the system but we wish to avoid using long parameter lists to functions or global data. Therefore, we place the necessary data in a Context Object and pass this object from function to function.

In our case, that meant wrapping up all smart pointers to lower-level libraries in a single library context, which would in turn be owned by the library manager and (for maximum safety) by all other objects in the library that need to access a lower-level manager. Over time, other private library shared state has crept into our library contexts as well. To maintain a strict ordering, the library manager generally references all the objects that it creates, and they reference the library context, but know nothing about the library manager.
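A stripped-down sketch of that arrangement might look like the following. The class names, the `Exists` and `IsLoadable` functions, and the use of `std::shared_ptr` are all assumptions made for illustration; the shape to notice is that the manager owns the context, resources hold the context, and resources never see the manager.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Stand-ins for two lower-level library managers.
class FileManager {
public:
    bool Exists(const std::string& path) const { return path == "boom.wav"; }
};
class AsyncJobManager {};

// Hypothetical library context: one object bundling every lower-level
// manager (and any other shared private state) the audio library needs.
struct AudioContext {
    AudioContext(std::shared_ptr<FileManager> files,
                 std::shared_ptr<AsyncJobManager> jobs)
        : fileMgr(std::move(files)), jobMgr(std::move(jobs)) {
        assert(fileMgr && jobMgr);
    }
    std::shared_ptr<FileManager> fileMgr;
    std::shared_ptr<AsyncJobManager> jobMgr;
};
typedef std::shared_ptr<AudioContext> AudioContextPtr;

// A resource inside the library: it knows the context, not the manager.
class SoundCue {
public:
    SoundCue(const AudioContextPtr& ctx, const std::string& path)
        : m_ctx(ctx), m_path(path) {}
    bool IsLoadable() const { return m_ctx->fileMgr->Exists(m_path); }

private:
    AudioContextPtr m_ctx;  // one pointer instead of one per lower-level manager
    std::string m_path;
};

// The library manager creates the context and hands it to what it creates.
class AudioManager {
public:
    AudioManager(const std::shared_ptr<FileManager>& files,
                 const std::shared_ptr<AsyncJobManager>& jobs)
        : m_ctx(std::make_shared<AudioContext>(files, jobs)) {}

    std::shared_ptr<SoundCue> CreateCue(const std::string& path) {
        return std::make_shared<SoundCue>(m_ctx, path);
    }

private:
    AudioContextPtr m_ctx;
};
```

In this sketch a `SoundCue` that outlives its `AudioManager` is still safe to use, because the cue's reference to the context keeps the lower-level managers alive.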

This architecture has generally worked well for us, and has been entirely successful in avoiding the manager order-of-creation and order-of-shutdown issues that plagued earlier games we worked on. It does have definite costs, however:

- A lot of typing. Library managers know about library resource objects which know about library contexts. But frequently there’s some functionality that you’d like to be accessible both to objects outside the library and objects inside the library. In this architecture, there’s nowhere to put that functionality except in the library context, with pass-through functions in the library manager. Since the library manager is usually hidden behind an abstract interface, you can end up adding function declarations to three headers and implementations to two cpp files before you’re done.

- Even more typing. All the lower-level managers held by a library also get passed through a creation function to the library manager’s constructor to the library context’s constructor. That was a mild annoyance when the engine was small and managers were few, but Despair now comprises over fifty libraries. Most of those libraries have managers, and there are a handful of high-level library managers that take almost all lower-level library managers as constructor parameters. Managers that take forty or fifty smart pointers as parameters are hard to create and slow to compile.

- Reference counting woes. In principle, every object should strongly reference its library context to maintain a strict hierarchy of ownership and to make sure that nothing is left accessing freed memory at program shutdown time. In practice, though, this doesn’t work well when objects can be created in multiple threads. Without interlocked operations, your reference counts will get out of sync and eventually your program will probably crash. But with interlocked operations, adding references to library contexts becomes much more expensive, and can become a significant part of your object creation costs. In practice, we’ve ended up using raw pointers to contexts in several libraries where the extra safety wasn’t worth the additional reference cost.

So managers and contexts are far from perfect. They’re just the best way we’ve found so far to stay safe in an ever-more complex virtual world.

An Anatomy of Despair: Aggregation Over Inheritance

One of the first decisions that Adrian and I made in our initial work on Despair was to prefer aggregation to inheritance whenever possible. This is not an original idea. If you Google for “aggregation inheritance” or “composition inheritance,” you’ll get a million hits. The C++ development community has been renouncing its irrational exuberance over inheritance for the last few years now. Sutter and Alexandrescu even include “prefer composition to inheritance” as a guideline in C++ Coding Standards.

Nonetheless, every game engine we’d worked on before Despair had a similar deep inheritance hierarchy of the sort that was in vogue in the mid-nineties: a player class might inherit from some kind of combatant class, which would inherit from a mover class, which would inherit from a physical object class, which would inherit from a base game object class.


This architecture has a lot of shortcomings. Let me enumerate a few of them:

First, it’s inflexible. If you want to create a new AI enemy that has some of the capabilities of enemy A and some of the capabilities of enemy B, that’s not a task that fits naturally into a hierarchical object classification. Ideally, you’d like the designers to be able to create a new enemy type without involving you, the programmer, at all. But with a deep object hierarchy, you have to get involved and you have to try to pick the best implementation from several bad options: to have your new enemy class inherit from one object and cut-and-paste the functions you need from the other; to not inherit from either, and to cut-and-paste the functions you need from both; or to tiptoe down the treacherous slippery slope of multiple inheritance and hope that it doesn’t lead to a diamond of death.

Second, a handful of classes in your hierarchy tend to grow without bound over a game’s development. If the player class is part of the object hierarchy, then you can expect this class to include input and control systems, custom animation controls, pickup and inventory systems, targeting assistance, network synchronization–plus any special systems required by the particular game that you’re making. One previous game that we worked on featured a 13,000-line player class implementation, and the player class inherited from a 12,000-line physical object class. It’s hard to find anything in files that size, and they’re frequent spots for merge conflicts since everyone’s trying to add new stuff to them all the time.

Third, deep inheritance is poor physical structure. If class A inherits from class B which inherits from class C, then the header file for A–A.h–has to #include B.h and C.h. As your hierarchy gets deeper, you’ll find that all of your leaf classes have to include four or five extra headers for their base classes at different levels. For most modern games, as much compile time is spent opening and reading header files as is spent actually compiling code. The more loosely your code is coupled, the faster you can compile. (See Lakos for more details.)

Therefore we resolved to make Despair as component-based as we could, and to keep our inheritance hierarchies as flat as possible. A game object in Despair, for example, is basically a thin wrapper around a UID and a standard vector of game components. Components can be queried for type information and dynamically cast. The game object provides only lifetime management and identifier scoping. It knows nothing about component implementations. It contains no traditional game object state like position, bounds, or visual representation.
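A rough sketch of what such a game object might look like follows. Every name in it (GameComponent, GameObject, AddComponent, FindComponent, the two example components and ApplyDamage) is invented for illustration, and it uses dynamic_cast as the type query where a production engine might well use its own type information.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical component base class. The virtual destructor makes the
// type polymorphic so that dynamic_cast works on it.
class GameComponent {
public:
    virtual ~GameComponent() = default;
};

// Thin wrapper around a UID and a vector of components. It owns the
// components' lifetimes but knows nothing about what they do.
class GameObject {
public:
    explicit GameObject(std::uint64_t uid) : m_uid(uid) {}

    std::uint64_t Uid() const { return m_uid; }

    template <typename T, typename... Args>
    T& AddComponent(Args&&... args) {
        auto component = std::make_unique<T>(std::forward<Args>(args)...);
        T& ref = *component;
        m_components.push_back(std::move(component));
        return ref;
    }

    // Returns the first component of the requested type, or nullptr.
    template <typename T>
    T* FindComponent() const {
        for (const auto& component : m_components) {
            if (T* typed = dynamic_cast<T*>(component.get())) {
                return typed;
            }
        }
        return nullptr;
    }

private:
    std::uint64_t m_uid;
    std::vector<std::unique_ptr<GameComponent>> m_components;
};

// State such as position or health lives in components, not in GameObject.
struct TransformComponent : GameComponent {
    float x = 0.0f, y = 0.0f, z = 0.0f;
};

struct HealthComponent : GameComponent {
    explicit HealthComponent(int hp) : hitPoints(hp) {}
    int hitPoints;
};

// Gameplay code asks for the component it needs and copes when it is absent.
inline bool ApplyDamage(GameObject& target, int amount) {
    assert(amount >= 0);
    HealthComponent* health = target.FindComponent<HealthComponent>();
    if (health == nullptr) {
        return false;  // e.g. scenery that cannot be damaged
    }
    health->hitPoints -= amount;
    return true;
}
```

An enemy that borrows capabilities from two existing enemy types is then a matter of attaching a different set of components rather than finding a place for a new class in a hierarchy.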

This approach has informed other systems as well. Our scene object implementation is similar to the game object implementation, with a single object representing each model that provides lifetime management for a vector of scene nodes. Scene nodes manage their own hierarchies for render state or skeletal transforms.

Another family of systems is built on a flow-graph library for visual editing. Game logic, animation systems, and materials can all be built by non-programmers wiring together graph components in the appropriate tools.

Using composition instead of inheritance has worked very well for us. Our primary concern when we set out in this new direction was that we’d end up with something that had horrible runtime performance. With Fracture almost complete, though, there’s no evidence that our performance is worse than it would have been with a deep inheritance hierarchy. If anything, I’m inclined to suspect that it’s better, since well-encapsulated components have better cache locality than large objects and since the fact that we only update dirty components each frame means that we can decide what does and doesn’t need to be updated at a finer granularity.
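The dirty-update idea can be sketched in a few lines. This is an illustration under assumptions, not Despair's implementation: TransformComponent, TransformSystem and the one-dimensional "transform" are made up, and storing components contiguously with a list of dirty indices is just one plausible way to get the behavior described.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical component. One dimension keeps the sketch short.
struct TransformComponent {
    float localX = 0.0f;
    float worldX = 0.0f;
    bool dirty = false;
};

// Owns all components of one type in contiguous storage and tracks
// which of them changed since the last update.
class TransformSystem {
public:
    std::size_t Create() {
        m_components.emplace_back();
        return m_components.size() - 1;
    }

    void SetLocalX(std::size_t index, float x) {
        assert(index < m_components.size());
        TransformComponent& component = m_components[index];
        component.localX = x;
        if (!component.dirty) {  // queue each component at most once
            component.dirty = true;
            m_dirtyIndices.push_back(index);
        }
    }

    float WorldX(std::size_t index) const {
        assert(index < m_components.size());
        return m_components[index].worldX;
    }

    // Recomputes only the components that changed. Returns how many
    // components were touched this frame.
    std::size_t UpdateDirty(float originX) {
        for (std::size_t index : m_dirtyIndices) {
            TransformComponent& component = m_components[index];
            component.worldX = originX + component.localX;
            component.dirty = false;
        }
        const std::size_t updated = m_dirtyIndices.size();
        m_dirtyIndices.clear();
        return updated;
    }

private:
    std::vector<TransformComponent> m_components;
    std::vector<std::size_t> m_dirtyIndices;
};
```

A frame in which nothing moved costs one pass over an empty list, whereas a monolithic object's update function typically has to run regardless of what changed.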

If I were starting over again, the only change I’d make with respect to object composition is to make scene objects more opaque and less like game objects. We have several hundred game components now, with more going in all the time, and the flexibility of having a thin game object interface that allows querying components for type has paid big dividends. I think that our game object system is close to ideal for iterating rapidly on gameplay. Scene objects are a different kind of problem, though. We haven’t added any new scene node types since the scene library was written, and all scene nodes are implemented in the scene library instead of in higher-level code. At the same time, it would be nice to be able to experiment with different optimizations of updating skeletal hierarchies without breaking higher-level code. All of this argues that following the Law of Demeter and hiding scene object implementation details would have been appropriate for scene objects even if it wasn’t appropriate in the rapid-prototyping environment of game objects.

Beyond the perfect abstract world of software architecture, component-based design also created a couple of surprises in the messier world of development process and human interaction. One lesson of working with a composition-based engine is that the learning curve for new programmers is steeper. For programmers who are used to being able to step through a few big nested functions and see the whole game, it can be disorienting to step through the game and discover that there’s just no there there. For example, Despair contains over 500 classes with the word “component” in their names and 100 classes with the word “object” in their names. Our games aren’t defined by C++ objects; they’re defined by relationships between them. To understand those relationships, you need good documentation and communication more than ever.

Another composition lesson is that component-based design takes a lot of the complexity that used to exist in code and pushes it into data. Designers aren’t used to designing objects and constructing inheritance hierarchies. Working with components requires new processes and good people. Cross-pollination is important. Your programmers need to work building objects in your tools, as well as writing code for components, and they need to work hand-in-hand with good technical designers who can provide tool feedback and build on the object primitives available to them. Like a game, your team isn’t defined by its individual components but by the relationships between them.

An Anatomy of Despair: Orthogonal Views

A frustrating feature of previous game engines we’d used was that they tended to overload hierarchy to mean multiple things.

The engine that we used at Cyan, for example, was a pure scene graph. Every part of the game was represented by one or more nodes in a hierarchy. But the hierarchy represented logical relationships in some places and kinematic relationships in others. Throughout the graph, ownership was conflated with update order: children would be deleted when their parents were deleted and parents always updated before their children. Kinematic attachment was performed by pruning and grafting trees in the graph, which had the effect of tying the lifetimes of attached objects to the lifetimes of their parents.


An Anatomy of Despair: Object Ownership

In every game engine that I worked on before Despair, I spent a lot of time tracking down memory leaks. Some leaks were obvious and easy to find. Some leaks involved complex patterns of ownership that thoroughly obscured what object was supposed to be responsible for deleting another. And some leaks involved AddRef/Release mismatches that would create cycles of ownership or that would leak whole object hierarchies.
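The ownership-cycle failure mode is easy to reproduce in miniature. The sketch below uses std::shared_ptr as a stand-in for hand-written AddRef/Release counting. Node and both functions are hypothetical, and the weak back-reference in the second function is only one common remedy, not necessarily the approach Despair took.

```cpp
#include <cassert>
#include <memory>

// Hypothetical node that can hold either a strong (owning) or a weak
// (non-owning) reference to a peer.
struct Node {
    std::shared_ptr<Node> strongPeer;
    std::weak_ptr<Node> weakPeer;
};

// Two nodes that own each other keep each other alive after every
// outside reference is gone. Returns true if the pair leaked.
inline bool StrongCycleLeaks() {
    std::weak_ptr<Node> observer;
    {
        auto a = std::make_shared<Node>();
        auto b = std::make_shared<Node>();
        a->strongPeer = b;
        b->strongPeer = a;
        assert(a.use_count() == 2);  // local handle plus b's reference
        observer = a;
    }
    const bool leaked = !observer.expired();
    if (auto a = observer.lock()) {
        a->strongPeer.reset();  // break the cycle so the sketch cleans up
    }
    return leaked;
}

// The same pair with a non-owning back-reference is destroyed as soon
// as the outside references go away.
inline bool WeakBackReferenceLeaks() {
    std::weak_ptr<Node> observer;
    {
        auto a = std::make_shared<Node>();
        auto b = std::make_shared<Node>();
        a->strongPeer = b;  // a owns b
        b->weakPeer = a;    // b only observes a
        observer = a;
    }
    return !observer.expired();
}
```

In a real engine the first case shows up without the tidy observer: nothing outside the cycle holds a pointer to it any more, which is what makes these leaks hard to track down.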

An Anatomy of Despair: Introduction

I’ve been working for a little more than three years on the Despair Engine, the game engine that Day 1 is using in Fracture and another, as-yet-unannounced, title. In the beginning, there were two of us working on the technology, me and my longtime friend and collaborator Adrian Stone. Now we’ve got thirty programmers working in the same codebase. Fracture’s getting close to shipping. This seems like a good time to look back at the principles that shaped our initial architecture and at the decisions whose consequences we’re living with today.

The name started as a joke. Adrian and I were out to lunch with our lead one day. Somebody made a crack about naming the engine “Despair.” One thing led to another, and by the end of the meal we’d plotted out a whole suite of despair-themed content creation tools, most of which never got made. It was 2004. We were starting from scratch on core technology for big-budget AAA games running on consoles that didn’t even exist yet. We figured that “Despair” would work one way or the other, either as an imperative to our competitors if we succeeded or as a sadly accurate description of our own feelings if we failed.

GDC 2008

This year was the tenth anniversary of my first GDC. It’s milestones like this that make one pause and take stock of one’s life. Over the last decade, one of my college classmates was appointed United States Attorney for South Carolina; another lost a limb fighting in Iraq; another married actor Steve Martin (yes, that Steve Martin). And I… I have spent most of my waking hours contributing, in my own small way, to the perpetual adolescence of the American male.

Like a career in game development, GDC is a mix of small rewards and great frustrations–and yet the two somehow balance each other out. Back in 1998, the attendees were a small brash crowd, excited that gaming had finally arrived and enthusiastic about the future. I think that was the first time somebody announced the oft-repeated canard about how we’re bigger than the movie industry. My friends and I all made the crawl from room to room on Suite Night, availing ourselves of the free drinks and the catered food from the warming trays. There was a party on the Queen Mary. I got a thick pile of t-shirts and an Intel graphics card, and thought, 3dfx better watch out now that Intel’s getting into the market.

Now GDC has become like SIGGRAPH. The crowds are enormous–nearly 15,000 in attendance this year, I’m told. But it’s not the size of the crowds that makes it less intimate. It’s the fact that so many of the people there don’t really have much to say to each other: there are indie game developers, console game developers, serious game developers, mobile phone game developers, people selling middleware and hardware and outsourcing to all of the above, recruiters, wannabes, publishers trying to sign developers, developers looking for a publisher, HR folks looking to hire artists and programmers and musicians, and press trying to cover the whole spectacle. The death of E3 meant that this year there were more sessions than ever that could be summed up as, “look at my game and how awesome it is.” I tried to avoid those. I spent my three days at GDC looking for quiet places to talk to the people who do the same thing I do. I didn’t go to any of the parties.

WordPress

When I started GameArchitect, the word blog didn’t exist yet, and there was, therefore, a real shortage of decent blogging software. I ended up buying Joel Spolsky’s CityDesk, mostly because it was what he used for Joel on Software and I like the look of his site.

CityDesk hasn’t had a lot of work over the last couple of years, though, and I never have managed to figure out how to make it generate a proper RSS feed. So I’m switching the site over to WordPress, about which I’ve heard good things. All old content is still available at http://www.gamearchitect.net/citydesk.html. Pardon the dust and plaster.